ICLR 2023 Notable Top 5% (Oral)

Abstract

While large-scale sequence modeling from offline data has led to impressive performance gains in natural language generation and image generation, directly translating such ideas to robotics has been challenging. One critical reason is that uncurated robot demonstration data, i.e., play data, collected from non-expert human demonstrators are often noisy, diverse, and distributionally multi-modal. This makes extracting useful, task-centric behaviors from such data a difficult generative modeling problem. In this work, we present Conditional Behavior Transformers (C-BeT), a method that combines the multi-modal generation ability of Behavior Transformer with future-conditioned goal specification. On a suite of simulated benchmark tasks, we find that C-BeT improves upon prior state-of-the-art work in learning from play data by an average of 45.7%. Further, we demonstrate for the first time that useful task-centric behaviors can be learned on a real-world robot purely from play data without any task labels or reward information.

Method

Conditional Behavior Transformers (C-BeT) is a method for learning conditional behaviors from uncurated play data without any task labels or rewards.
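C-BeT inherits its multi-modal action generation from Behavior Transformer (BeT). As we understand the original BeT design, continuous actions are clustered with k-means, and the model predicts a categorical distribution over cluster "bins" plus a continuous residual offset, so that distinct modes (e.g. reaching left vs. right) land in distinct bins instead of being averaged. The sketch below illustrates only that discretization step in plain Python; the function names `kmeans`, `encode`, and `decode` are hypothetical, it is not code from this project, and the transformer that predicts bins and offsets is omitted.

```python
import random
from typing import List, Sequence, Tuple

Action = Tuple[float, ...]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two action vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(centers: List[Action], a: Sequence[float]) -> int:
    """Index of the cluster center closest to action `a`."""
    return min(range(len(centers)), key=lambda i: sq_dist(centers[i], a))


def kmeans(actions: List[Action], k: int, iters: int = 20, seed: int = 0) -> List[Action]:
    """Plain Lloyd's k-means over continuous actions; returns k cluster centers."""
    rng = random.Random(seed)
    centers = list(rng.sample(actions, k))  # initialize from data points
    for _ in range(iters):
        groups: List[List[Action]] = [[] for _ in range(k)]
        for a in actions:
            groups[nearest(centers, a)].append(a)
        for i, g in enumerate(groups):
            if g:  # keep the previous center if a cluster is empty
                dim = len(g[0])
                centers[i] = tuple(sum(a[d] for a in g) / len(g) for d in range(dim))
    return centers


def encode(centers: List[Action], a: Action) -> Tuple[int, Action]:
    """Continuous action -> (bin index, residual offset from that bin's center)."""
    b = nearest(centers, a)
    offset = tuple(x - c for x, c in zip(a, centers[b]))
    return b, offset


def decode(centers: List[Action], b: int, offset: Action) -> Action:
    """(bin index, residual offset) -> continuous action."""
    return tuple(c + o for c, o in zip(centers[b], offset))


# Toy bimodal "play" actions: one mode near (-1, 0), another near (1, 0).
left = [(-1.0 + 0.01 * i, 0.02 * i) for i in range(5)]
right = [(1.0 - 0.01 * i, -0.02 * i) for i in range(5)]
centers = kmeans(left + right, k=2)
bin_idx, offset = encode(centers, (0.5, 0.0))
```

Because the offset is stored relative to the chosen center, `decode(centers, *encode(centers, a))` recovers `a` up to floating-point rounding, while the bin index alone already separates the two modes.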

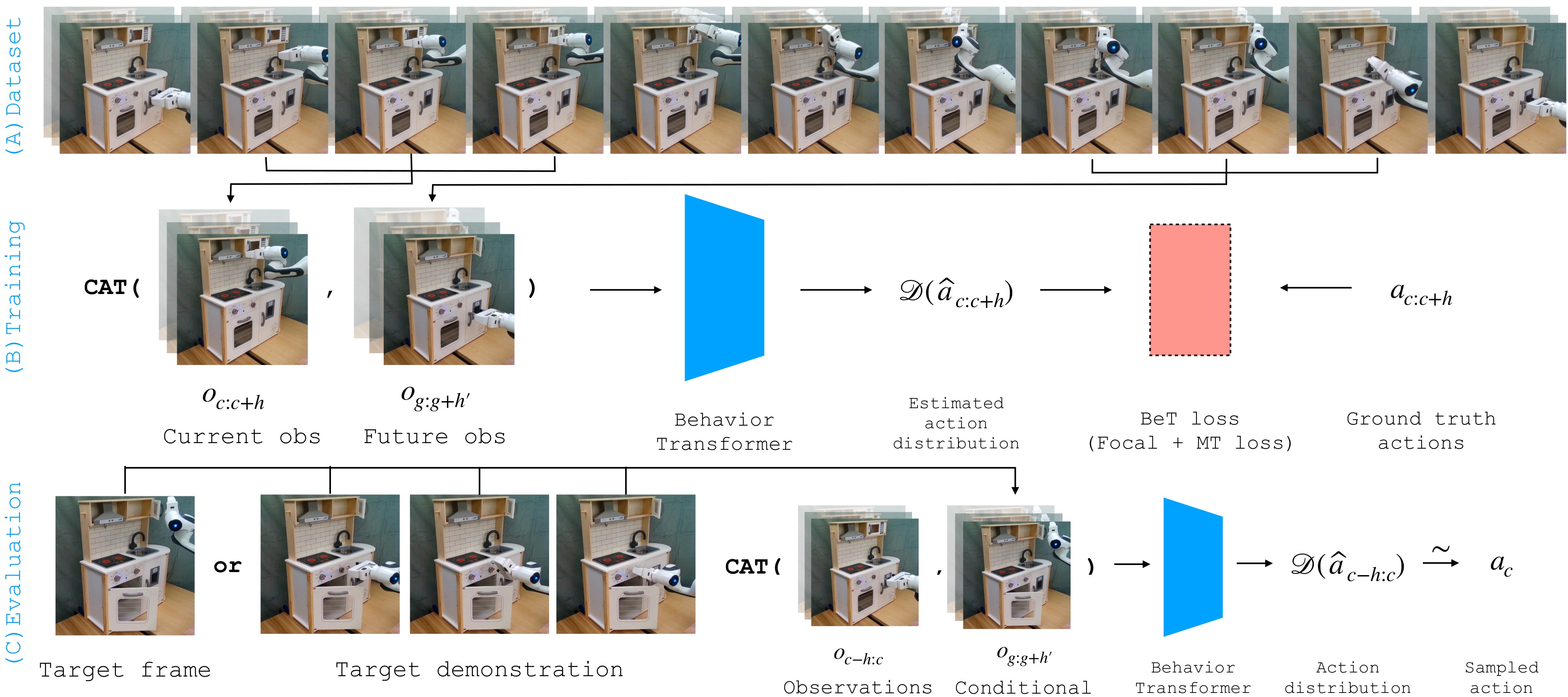

The architecture of our method is shown in the figure. During training, we use BeT to condition on both the current observations and future observations randomly sampled from later in the same episode. During evaluation, the model can be conditioned on target observations or on newly collected demonstrations to generate the targeted behavior.
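The training-time conditioning described above can be sketched as a sampler that draws a window of current observations and actions from an episode, then draws a goal window from strictly later in the same episode. This is a minimal illustration under assumptions: the window lengths `window` and `goal_window`, the uniform sampling of both positions, and the `Sample` container are hypothetical choices, not the authors' exact data pipeline.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Sample:
    obs: List[object]      # current observation window fed to the model
    goal: List[object]     # future observations used as the goal condition
    actions: List[object]  # action targets aligned with `obs`


def sample_goal_conditioned(
    episode_obs: Sequence[object],
    episode_actions: Sequence[object],
    window: int,        # hypothetical length of the current-observation window
    goal_window: int,   # hypothetical length of the future-goal window
    rng: random.Random,
) -> Sample:
    """Sample (current window, future goal window) from a single episode."""
    T = len(episode_obs)
    if T < window + goal_window:
        raise ValueError("episode too short for the requested windows")
    # Leave room after the current window for at least one goal window.
    start = rng.randrange(0, T - window - goal_window + 1)
    end = start + window
    # The goal window begins at or after the end of the current window,
    # so every goal observation lies in the future of the current window.
    goal_start = rng.randrange(end, T - goal_window + 1)
    return Sample(
        obs=list(episode_obs[start:end]),
        goal=list(episode_obs[goal_start:goal_start + goal_window]),
        actions=list(episode_actions[start:end]),
    )
```

For example, with `episode_obs = list(range(20))` each call returns four consecutive observations and a two-step goal drawn from later timesteps. At evaluation time the `goal` slot would instead be filled with a target observation or with frames from a newly collected demonstration.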

Demonstration timelapse

Real-world robot rollouts

(videos sped up ~30x)

Conditional rollouts of C-BeT in a real-world toy kitchen environment. We condition the model on a target future observation in which the task has been completed, and record a timelapse of each rollout synchronously from two camera views. In the last row below, we roll out the model with unseen objects added to the environment as perturbations.

Open microwave and oven doors

Put the pot in the sink

Operate the stove knobs

Open the oven door

Perturbation with unseen objects

BibTeX

@article{cui2022play,
  title={From Play to Policy: Conditional Behavior Generation from Uncurated Robot Data},
  author={Cui, Zichen Jeff and Wang, Yibin and Shafiullah, Nur Muhammad Mahi and Pinto, Lerrel},
  journal={arXiv preprint arXiv:2210.10047},
  year={2022}
}